
The world is on fire, but not because of C23

New ad-free location: https://davmac.org/blog/the-world-is-on-fire-but-not-c23.html

An article was published on acmqueue last month entitled “Catch-23: The New C Standard Sets the World on Fire”. It’s a bad article; I’ll go into that shortly; it comes on the back of similar bad arguments that I’ve personally either stumbled across, or been involved in, lately, so I’d like to respond to it.

So what’s bad about the article? Let me quote:

Progress means draining swamps and fencing off tar pits, but C23 actually expands one of C’s most notorious traps for the unwary. All C standards from C89 onward have permitted compilers to delete code paths containing undefined operations—which compilers merrily do, much to the surprise and outrage of coders.16 C23 introduces a new mechanism for astonishing elision: By marking a code path with the new
unreachable annotation,12 the programmer assures the compiler that control will never reach it and thereby explicitly invites the compiler to elide the marked path.

The complaint here seems to be that the addition of the “unreachable” annotation introduces another way that undefined behaviour can be introduced into a C program: if the annotation is incorrect and the program point is actually reachable, the behaviour is undefined.

The premise seems to be that, since undefined behaviour is bad, any new way of invoking undefined behaviour is also bad. While it’s true that undefined behaviour can cause huge problems, and that this has been a sore point with C for some time, the complaint here is fallacious: the whole point of this particular annotation is to allow the programmer to specify their intent that a certain path be unreachable, as opposed to the more problematic scenario where a compiler determines code is unreachable because a necessarily-preceding (“dominating”) instruction would necessarily have undefined behaviour. In other words it better allows the compiler to distinguish between cases where the programmer has made particular assumptions versus when they have unwittingly invoked undefined behaviour in certain conditions. This is spelled out in the proposal for the feature:

It seems, that in some cases there is an implicit assumption that code that makes a potentially undefined access, for example, does so willingly; the fact that such an access is unprotected is interpreted as an assertion that the code will never be used in a way that makes that undefined access. Where such an assumption may be correct for highly specialized code written by top tier programmers that know their undefined behavior, we are convinced that the large majority of such cases are just plain bugs

That is to say, while it certainly does add “another mechanism to introduce undefined behaviour”, part of the purpose of the feature is actually to limit the damage and/or improve the diagnosis of code which unintentionally invokes undefined behaviour. It’s concerning that the authors of the article have apparently not read the requisite background information, or have failed to understand it, but have gone ahead with their criticism anyway.

In fact, they continue:

C23 furthermore gives the compiler license to use an unreachable annotation on one code path to justify removing, without notice or warning, an entirely different code path that is not marked unreachable: see the discussion of puts() in Example 1 on page 316 of N3054.9

When you read the proffered example, you quickly notice that this description is wrong: the “entirely different code path” is in fact dominated by the code path that is marked unreachable and thus is, in fact, the same code path. That is, it is not possible to reach one part without reaching the other, so they are on the same path; if either is unreachable, then clearly the other must be too; if one contains undefined behaviour then the entire code path does and (just as before C23) the compiler might choose to eliminate it. Bizarrely, the authors are complaining about a possible effect of undefined behaviour as if it were a new thing resulting from this new feature.
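To make the dominance point concrete, here is a minimal sketch in the spirit of that example (it is not a verbatim copy of it, and it is written as C++ rather than C23). The macro name ASSUME_UNREACHABLE and the function name describe_args are my own inventions; the macro uses C++23’s std::unreachable where the library provides it and otherwise falls back to a compiler builtin, which is an assumption about the toolchain.

```cpp
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <utility>

// Hypothetical portability shim: prefer C++23's std::unreachable(), then the
// GCC/Clang builtin, and as a last resort simply abort.
#if defined(__cpp_lib_unreachable)
#  define ASSUME_UNREACHABLE() std::unreachable()
#elif defined(__GNUC__)
#  define ASSUME_UNREACHABLE() __builtin_unreachable()
#else
#  define ASSUME_UNREACHABLE() std::abort()
#endif

int describe_args(int argc, char** argv) {
    assert(argv != nullptr);
    if (argc <= 2)
        ASSUME_UNREACHABLE();  // the programmer's promise: argc is always > 2
    else
        return std::printf("%s: we see %s\n", argv[0], argv[1]);
    // The only way to arrive here is by falling out of the `argc <= 2` branch,
    // that is, by passing through the marker above. This statement is on the
    // same path as the marker, so a compiler may drop it as well.
    return std::puts("this should never be reached");
}
```

Calling describe_args with argc greater than 2 is fine and prints the first argument. Calling it with a smaller argc breaks the promise, and at that point the behaviour is undefined whether or not the final puts call is still present in the generated code.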

The criticism seems to stem at least partly from the notion that undefined behaviour is universally bad. I can sympathise with this to some degree, since the possible effects of UB are notorious, but at the same time it should be obvious that railing against all new undefined behaviour in C is unproductive. There will always be UB in C. The nature of the language, and what it is used for, all but guarantee this. However, it’s important to recognise that the UB is there for a reason, and also that not all UB is of the “nasal daemons” variety. While those who really understand UB can often be heard to decry “don’t do that, it’s UB, it might kill your dog” there is also an important counterpoint: most UB will not kill your dog.

“Undefined behaviour” does not, in fact, mean that a compiler must cause your code to behave differently than how you wanted it to. In fact it’s perfectly fine for a compiler (“an implementation”) to offer behavioural guarantees far beyond what is required by the language standard, or to at least provide predictable behaviour in cases that are technically UB. This is an important point in the context of another complaint in the article:

Imagine, then, my dismay when I learned that C23 declares realloc(ptr,0) to be undefined behavior, thereby pulling the rug out from under a widespread and exemplary pattern deliberately condoned by C89 through C11. So much for stare decisis. Compile idiomatic realloc code as C23 and the compiler might maul the source in most astonishing ways and your machine could ignite at runtime.16

To be clear, “realloc(ptr,0)” was previously allowed to return either NULL, or another pointer value (which is not allowed to be dereferenced). Different implementations can (and do) differ in their choice. While I somewhat agree that making this undefined behaviour instead is of no value, I’m also confident that it will have next to zero effect in practice. Standard library implementations aren’t going to change their current behaviour because they won’t want to break existing programs, and compilers won’t treat realloc with size 0 specially for the same reason (beyond perhaps offering a warning when such cases are detected statically). Also, calling code which relies on realloc returning a null pointer when given a 0 size “idiomatic” is a stretch, “exemplary” is an even further stretch, and “condoned by C89 through C11” is just plain wrong; the standard rationale suggests a practice for implementations, not for applications.
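For code that wants to stay clear of the question entirely, one option is to never pass a zero size to realloc in the first place. The following is a minimal sketch of that idea; resize_buffer is a hypothetical helper name of my own, not something from the standard or from the article.

```cpp
#include <cstdlib>

// Hypothetical helper: resize a malloc-family allocation without ever
// calling realloc with a size of 0.
//  - new_size == 0: the old block is freed and nullptr is returned.
//  - new_size  > 0: behaves like realloc; nullptr then means the resize
//    failed and the old block is still valid and still owned by the caller.
void* resize_buffer(void* ptr, std::size_t new_size) {
    if (new_size == 0) {
        std::free(ptr);  // free(nullptr) is a no-op, so this is always safe
        return nullptr;
    }
    return std::realloc(ptr, new_size);
}
```

The caller already knows which size it asked for, so the two meanings of a null return can be told apart without relying on what any particular library does for a zero-size request.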

Later, the authors reveal serious misunderstandings about what behaviour is and is not undefined:

Why are such requests made? Often because of arithmetic bugs. And what is a non-null pointer from malloc(0) good for? Absolutely nothing, except shooting yourself in the foot. It is illegal to dereference such a pointer or even compare it to any other non-null pointer (recall that pointer comparisons are combustible if they involve different objects).

This isn’t true. It’s perfectly valid to compare pointers that point to different objects. Perhaps the author is confusing this with the use of a pointer to an object whose lifetime has ended, or calculating the difference between pointers; it’s really not clear. Note that the requirement (before C23) for a 0-size allocation was that “either a null pointer is returned, or the behavior is as if the size were some nonzero value, except that the returned pointer shall not be used to access an object”; there’s no reason why such a pointer couldn’t be compared to another in either case.
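As a small illustration, and assuming the comparison in question is an equality test, the sketch below compares the result of a zero-size allocation against another live allocation without dereferencing either. The function names are hypothetical. (Ordering comparisons such as < between unrelated objects are a separate matter that this sketch does not touch.)

```cpp
#include <cstdlib>

// Equality comparison between pointers to different objects is well defined.
bool is_same_address(const void* a, const void* b) { return a == b; }

// Returns true if a zero-size allocation is either null or distinct from
// another live allocation. Neither pointer is ever dereferenced.
bool zero_size_alloc_is_null_or_distinct() {
    void* zero = std::malloc(0);
    void* other = std::malloc(1);
    const bool ok = (zero == nullptr) || !is_same_address(zero, other);
    std::free(zero);   // freeing the result of malloc(0) is allowed
    std::free(other);
    return ok;
}
```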

It’s a shame that such hyperbolic nonsense gets published, even in on-line form.

Forgetting about the problem of memory

There’s a pattern that emerged in software some time ago, that bothers me: in a nutshell, it is that it’s become acceptable to assume that memory is unlimited. More precisely, it is the notion that it is acceptable for a program to crash if memory is exhausted.

It’s easy to guess some reasons why this is the case: for one thing, memory is much more prevalent than it used to be. The first desktop computer I ever owned had 32 KB of memory (I later owned a graphing calculator with the same processor and same amount of memory as that computer). My current desktop PC, on the other hand, literally has more than a million times that amount of memory.

Given such huge amounts of memory to play with, it’s no wonder that programs are written with the assumption that they will always have memory available. After all, you couldn’t possibly ever chew up a whole 32 gigabytes of RAM with just the handful of programs that you’d typically run on a desktop, could you? Surely it’s enough that we can forget about the problem of ever running out of memory. (Many of us have found out the unfortunate truth, the hard way; in this age where simple GUI apps are sometimes bundled together with a whole web browser – and in which web browsers will happily let a web page eat up more and more memory – it certainly is possible to hit that limit).

But, for some time, various languages with garbage-collecting runtimes haven’t even exposed memory allocation failure to the underlying application (this is not universally the case, but it’s common enough). This means that a program written in such a language that can’t allocate memory at some point will generally crash – hopefully with a suitable error message, but by no means with any sort of certainty of a clean shutdown.

This principle has been extended to various libraries even for languages like C, where checking for allocation failure is (at least in principle) straightforward. GLib (one of the foundation libraries underlying the GTK GUI toolkit) is one example: the g_malloc function that it provides will terminate the calling process if requested memory can’t be allocated (a g_try_malloc function also exists, but it’s clear that the g_malloc approach to “handling” failure is considered acceptable, and any library or program built on GLib should typically be considered prone to unscheduled termination in the face of an allocation failure).

Apart from the increased availability of memory, I assume that the other reason for ignoring the possibility of allocation failure is just because it is easier. Proper error handling has traditionally been tedious, and memory allocation operations tend to be prolific; handling allocation failure can mean having to incorporate error paths, and propagate errors, through parts of a program that could otherwise be much simpler. As software gets larger, and more complex, being able to ignore this particular type of failure becomes more attractive.
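To give a feel for that tedium, here is a minimal sketch of what propagating allocation failure by hand looks like. Everything named here (AllocFn, default_alloc, try_duplicate, NamePair, make_name_pair, free_name_pair) is hypothetical; the allocator is passed in as a parameter only so that the failure path can be exercised deliberately, and it is assumed to be malloc-compatible.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <cstring>

// Hypothetical allocator hook; must return memory that std::free can release.
using AllocFn = void* (*)(std::size_t);

void* default_alloc(std::size_t n) { return std::malloc(n); }

// Returns a heap copy of `text`, or nullptr if the allocation failed.
char* try_duplicate(const char* text, AllocFn alloc = default_alloc) {
    assert(text != nullptr);
    const std::size_t n = std::strlen(text) + 1;
    char* copy = static_cast<char*>(alloc(n));
    if (copy == nullptr) return nullptr;
    std::memcpy(copy, text, n);
    return copy;
}

struct NamePair {
    char* first;
    char* second;
};

// Every allocation adds an error path, and later failures must undo the
// earlier successes. On failure `out` is left untouched.
bool make_name_pair(const char* a, const char* b, NamePair* out,
                    AllocFn alloc = default_alloc) {
    char* first = try_duplicate(a, alloc);
    if (first == nullptr) return false;
    char* second = try_duplicate(b, alloc);
    if (second == nullptr) {
        std::free(first);
        return false;
    }
    out->first = first;
    out->second = second;
    return true;
}

void free_name_pair(NamePair* pair) {
    std::free(pair->first);
    std::free(pair->second);
    pair->first = nullptr;
    pair->second = nullptr;
}
```

Two allocations already need two checks and one cleanup step; a function that makes ten allocations needs correspondingly more, which is exactly the cost that “just terminate” libraries let their callers skip.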

The various “replacement for C” languages that have been springing up often have “easier error handling” as a feature – although they don’t always extend this to allocation failure; the Rust standard library, for example, generally takes the “panic on allocation failure” approach (I believe there has been work to offer failure-returning functions as an alternative, but even with Rust’s error-handling paradigms it is no doubt going to introduce some complexity into an application to make use of these; also, it might not be clear if Rust libraries will handle allocation failures without a panic, meaning that a developer needs to be very careful if they really want to create an application which can gracefully handle such failure).

Even beyond handling allocation failure in applications, the operating system might not expect (or even allow) applications to handle memory allocation failure. Linux, as it’s typically configured, has overcommit enabled, meaning that it will allow memory allocations to “succeed” when only address space has actually been allocated in the application; the real memory allocation occurs when the application then uses this address space by storing data into it. Since at that point there is no real way for the application to handle allocation failure, applications will be killed off by the kernel when such failure occurs (via the “OOM killer”). Overcommit can be disabled, theoretically, but to my dismay I have discovered recently that this doesn’t play well with cgroups (Linux’s resource control feature for process groups): an application in a cgroup that attempts to allocate more than the hard limit for the cgroup will generally be terminated, rather than have the allocation fail, regardless of the overcommit setting.
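On Linux the current overcommit policy can be read from /proc/sys/vm/overcommit_memory, where 0 means heuristic overcommit, 1 means always overcommit and 2 means strict accounting. The sketch below is one way a program might check this at startup; the function names are my own, and as noted above a “strict” answer says nothing about what a cgroup limit will do.

```cpp
#include <fstream>
#include <optional>
#include <string>

// Parses the single-digit mode found in the proc file.
// Returns std::nullopt for anything other than exactly "0", "1" or "2".
std::optional<int> parse_overcommit_mode(const std::string& text) {
    if (text.size() != 1) return std::nullopt;
    const char c = text[0];
    if (c < '0' || c > '2') return std::nullopt;
    return c - '0';
}

// Reads the mode from the given path (the Linux default is shown).
// Returns std::nullopt if the file is missing or unreadable, for example on
// a non-Linux system.
std::optional<int> read_overcommit_mode(
        const char* path = "/proc/sys/vm/overcommit_memory") {
    std::ifstream in(path);
    std::string line;
    if (!in || !std::getline(in, line)) return std::nullopt;
    return parse_overcommit_mode(line);
}
```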

If the kernel doesn’t properly honour allocation requests, and will kill applications without warning when memory becomes exhausted, there’s certainly an argument to be made that there’s not much point for an application to try to be resilient to allocation failure.

But is this really how it should be?

I’m concerned, personally, about this notion that processes can just be killed off by the system. It rings false. We have these amazing machines at our disposal, with fantastic ability to precisely process data in whatever way and for whatever purpose we want – but, prone to sudden failures that cannot really be predicted or fully controlled, and which mean the system as a whole is fundamentally less reliable. Is it really ok that any process on the system might just be terminated? (Linux’s OOM killer uses heuristics to try and terminate the “right” process, but of course that doesn’t necessarily correspond to what the user or system administrator would want).

I’ve discussed desktops but the problem is still a problem on servers, perhaps more so; wouldn’t it be better if critical processes are able to detect and respond to memory scarcity rather than be killed off arbitrarily? Isn’t scaling back, at the application level, better than total failure, at least in some cases?

Linux could be fixed so that OOM was not needed on properly configured systems, even with cgroups; anyway there are other operating systems that, reportedly, have better behaviour. That would still leave the applications which don’t handle allocation failure, of course; fixing that would take (as well as a lot of work) a change in developer mindset. The thing is, while the odd application crash due to memory exhaustion probably doesn’t bother some, it certainly bothers me. Do we really trust that applications will reliably save necessary state at all times prior to crashing due to a malloc failure? Are we really ok with important system processes occasionally dying, with system functionality accordingly affected? Wouldn’t it be better if this didn’t happen?

I’d like to say no, but the current consensus would seem to be against me.

Addendum:

I tried really hard in the above to be clear how minimal a claim I was making, but there are comments that I’ve seen and discussions I’ve been embroiled in which make it clear this was not understood by at least some readers. To sum up in what is hopefully an unambiguous fashion:

- I believe some programs – not all, not even most – in some circumstances at least, need to or should be able to reliably handle an allocation failure. This is a claim I did not think would be contentious, and I haven’t been willing to argue it as that wasn’t the intention of the piece (but see below).

- I’m aware of plenty of arguments (of varying quality) why this doesn’t apply to all programs (or even, why it doesn’t apply to a majority of programs). I haven’t argued, or claimed, that it does.

- I’m critical of overcommit at the operating system level, because it severely impedes the possibility of handling allocation failure at the application level.

- I’m also critical of languages and/or libraries which make responding to allocation failure difficult or impossible. But (and admittedly, this exception wasn’t explicit in the article) if used for an application where termination on allocation failure is acceptable, then this criticism doesn’t apply.

- I’m interested in exploring language and API design ideas that could make handling allocation failure easier.

The one paragraph in particular that I think could possibly have caused confusion is this one:

I’m concerned, personally, about this notion that processes can just be killed off by the system. It rings false. We have these amazing machines at our disposal, with fantastic ability to precisely process data in whatever way and for whatever purpose we want – but, prone to sudden failures that cannot really be predicted or fully controlled, and which mean the system as a whole is fundamentally less reliable. Is it really ok that any process on the system might just be terminated?

Probably, there should have been emphasis on the “any” (in “any process on the system”) to make it clear what I was really saying here, and perhaps the “system as a whole is fundamentally less reliable” is unnecessary fluff.

There’s also a question in the concluding paragraph:

Do we really trust that applications will reliably save necessary state at all times prior to crashing due to a malloc failure?

This was a misstep and very much not the question I wanted to ask; I can see how it’s misleading. The right question was the one that follows it:

Are we really ok with important system processes occasionally dying, with system functionality accordingly affected? Wouldn’t it be better if this didn’t happen?

Despite those slips, I think if you read the whole article carefully the key thrust should be apparent.

For anyone wanting a case where an application really does need to be able to handle allocation failures, I recently stumbled across one really good example:

To start with, I write databases for a living. I run my code on containers with 128MB when the user uses a database that is 100s of GB in size. Even if running on proper server machines, I almost always have to deal with datasets that are bigger than memory. Running out of memory happens to us pretty much every single time we start the program. And handling this scenario robustly is important to building system software. In this case, planning accordingly in my view is not using a language that can put me in a hole. This is not theoretical, that is real scenario that we have to deal with.

The other example is service managers, of which I am the primary author of one (Dinit), which is largely what got me thinking about this issue in the first place. A service manager has a system-level role and if one dies unexpectedly it potentially leaves the whole system in an awkward state (and it’s not in general possible to recover just by restarting the service manager). In the worst case, a program running as PID 1 on Linux which terminates will cause the kernel to panic. (The OOM killer will not target PID 1, but it still should be able to handle regular allocation failure gracefully). However, I’m aware of some service manager projects written using languages that will not allow handling allocation failure, and it concerns me.

Hammers and nails, and operator overloads

A response to “Spooky action at a distance” by Drew DeVault.

As Abraham Maslow said in 1966, “I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.”

Wikipedia, “Law of the Instrument”

Our familiarity with particular tools, and the ways in which they work, predisposes us in our judgement of others. This is true also with programming languages; one who is familiar with a particular language, but not another, might tend to judge the latter unfavourably based on perceived lack of functionality or feature found in the former. Of course, it might turn out that such a lack is not really important, because there is another way to achieve the same result without that feature; what we should really focus on is exactly that, the end result, not the feature.

Drew DeVault, in his blog post “Spooky action at a distance”, makes the opposite error: he takes a particular feature found in other languages, specifically, operator overloading, and claims that it leads to difficulty in understanding (various aspects of the relevant) code:

The performance characteristics, consequences for debugging, and places to look for bugs are considerably different than the code would suggest on the surface

Yes, in a language with operator overloading, an expression involving an operator may effectively resolve to a function call. DeVault calls this “spooky action” and refers to some (otherwise undefined) “distance” between an operator and its behaviour (hence “at a distance”, from his title).
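In C++ terms, an overloaded operator is just a function with an unusual name, and the infix form is shorthand for calling it. A minimal sketch, using a hypothetical Vec2 type of my own:

```cpp
// Hypothetical two-component vector type, for illustration only.
struct Vec2 {
    int x;
    int y;
};

// An overloaded operator is an ordinary function whose name is `operator+`.
Vec2 operator+(Vec2 a, Vec2 b) { return Vec2{a.x + b.x, a.y + b.y}; }

// The infix spelling...
Vec2 add_infix(Vec2 a, Vec2 b) { return a + b; }

// ...and the explicit function-call spelling resolve to the same function.
Vec2 add_explicit(Vec2 a, Vec2 b) { return operator+(a, b); }
```

Seen this way, the “distance” between a + b and its behaviour is the same as the distance between any call site and the definition of the function it calls.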

DeVault’s hammer, then, is called “C”. And if another language offers greater capability for abstraction than C does, that is somehow “spooky”; code written that way is a bent nail, so to speak.

Let’s look at his follow-up example about strings:

Also consider if x and y are strings: maybe “+” means concatenation? Concatenation often means allocation, which is a pretty important side-effect to consider. Are you going to thrash the garbage collector by doing this? Is there a garbage collector, or is this going to leak? Again, using C as an example, this case would be explicit:

I wonder about the point of the question “is there a garbage collector, or is this going to leak?” – does DeVault really think that the presence or absence of a garbage collector can be implicit in a one-line code sample? Presumably he does not furthermore really believe that lack of a garbage collector would necessitate a leak, although that’s implied by the unfortunate phrasing. Ironically, the C code he then provides for concatenating strings does leak – there’s no deallocation performed at all (nor is there any checking for allocation failure, potentially causing undefined behaviour when the following lines execute).

Taking C++, we could write the string concatenation example as:

std::string newstring = x + y;

Now look again at the questions DeVault posed. First, does the “+” mean concatenation? It’s true that this is not certain from this one line of code alone, since in fact it depends on the types of x and y, but there is a good chance it does, and we can anyway tell by looking at the surrounding code, which of course we need to do anyway in order to truly understand what this code is doing (and why) regardless of what language it is written in. I’ll add that even if it does turn out to be difficult to determine the types of the operands from inspecting the immediately surrounding code, this is probably an indication of badly written (or badly documented) code*.

Any C++ systems programmer, with only a modest amount of experience, would also almost certainly know that string concatenation may involve heap allocation. There’s no garbage collector (although C++ allows for one, it is optional, and I’m not aware of any implementations that provide one). True, there’s still no check for allocation failure, though here it would throw an exception and most likely lead to (defined) imminent program termination instead of undefined behaviour. (Yes, the C code most likely would also terminate the program immediately if the allocation failed; but technically this is not guaranteed; and a C programmer should know not to assume that undefined behaviour in a C program will actually behave in some certain way, even if they believe that they know how their code should be translated by the compiler).

So, we reduced the several-line C example to a single line, which is straight-forward to read and understand, and for which we do in fact have ready answers to the questions posed by DeVault (who seems to be taking the tack that the supposed difficulty of answering these questions contributes to a case against operator overloading).

Importantly, there’s also no memory leak, unlike in the C code, since the string destructor will perform any necessary deallocation. Would the destructor call (occurring when the string goes out of scope) also count as “spooky action at a distance”? I guess that it should, according to DeVault’s definition, although that is a bit too fuzzy to be sure. Is this “spooky action” problematic? No, it’s downright helpful. It’s also not really spooky, since as a C++ programmer, we expect it.
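For comparison, here is a minimal sketch of both styles with the allocation check included. This is not DeVault’s code; concat_c and concat_cpp are hypothetical names, and the C-style version is written in C++ syntax only to keep the two side by side.

```cpp
#include <cstdlib>
#include <cstring>
#include <new>
#include <optional>
#include <string>

// C style: allocation is explicit, so the failure check and the eventual
// std::free() are the caller's job. Returns nullptr if allocation fails.
char* concat_c(const char* x, const char* y) {
    const std::size_t lx = std::strlen(x);
    const std::size_t ly = std::strlen(y);
    char* out = static_cast<char*>(std::malloc(lx + ly + 1));
    if (out == nullptr) return nullptr;
    std::memcpy(out, x, lx);
    std::memcpy(out + lx, y, ly + 1);  // also copies the terminating '\0'
    return out;
}

// C++ style: operator+ allocates as needed, allocation failure surfaces as
// std::bad_alloc, and the std::string destructor releases the memory.
std::optional<std::string> concat_cpp(const std::string& x,
                                      const std::string& y) {
    try {
        return x + y;
    } catch (const std::bad_alloc&) {
        return std::nullopt;
    }
}
```

The result of concat_c has to be passed to std::free by whoever ends up owning it; the result of concat_cpp cleans up after itself when it goes out of scope.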

It’s true that C’s limitations often force code to be written in such a way that low-level details are exposed, and that this can make it easier to follow control flow, since everything is explicit. In particular, lack of user-defined operator overloading, combined with lack of function overloading, means that types often become explicit when variables are used (the argument to strlen is, presumably, a string). But it’s easy to argue – and I do – that this doesn’t really matter. Abstractions such as operator overloading exist for a reason; in many cases they aid in code comprehension, and they don’t really obscure details (such as allocation) that DeVault suggests they do.

As a counter-example to DeVault’s first point, consider:

x + foo()

This is a very brief line of C code, but now we can’t say whether it performs allocation, nor talk about performance characteristics or so forth, without looking at other parts of the code.

We got to the heart of the matter earlier on: you don’t need to understand everything about what a line of code does by looking at that line in isolation. In fact, it’s hard to see how a regular function call (in C or any other language) doesn’t in fact also qualify as “spooky action at a distance”, unless you take the stance that, since it is a function call, we know that it goes off somewhere else in the code, whereas for an “x + y” expression we don’t – but then you’re also wielding C as your hammer: the only reason you think that an operator doesn’t involve a call to a function is because you’re used to a language where it doesn’t.

* If at this stage you want to argue “but C++ makes it easy to write bad code”, be aware that you’ve gone off on a tangent; this is not a discussion about the merits or lack-thereof of C++ as a whole, we’re just using it as an example here for a discussion on operator overloading.

Escape from System D, episode VII

Summary: Dinit reaches alpha; Alpine Linux demo image; Booting FreeBSD

Well, it’s been an awfully long time since I last blogged about Dinit (web page, GitHub), my service-manager / init / wannabe-Systemd-competitor. I’d have to say, I never thought it would take this long to come this far; when I started the project, it didn’t seem such a major undertaking, but as is often the case with hobby projects, life started getting in the way.

In an earlier episode, I said:

Keeping the momentum up has been difficult, and there’s been some longish periods where I haven’t made any commits. In truth, that’s probably to be expected for a solo, non-funded project, but I’m wary that a month of inactivity can easily become three, then six, and then before you know it you’ve actually stopped working on the project (and probably started on something else). I’m determined not to let that happen – Dinit will be completed. I think the key is to choose the right requirements for “completion” so that it can realistically happen; I’ve laid out some “required for 1.0” items in the TODO file in the repository and intend to implement them, but I do have to restrain myself from adding too much. It’s a balance between producing software that you are fully happy with and that feels complete and polished.

This still holds. On the positive side, I have been chipping away at those TODOs; on the other hand I still occasionally find myself adding more TODOs, so it’s a little hard to measure progress.

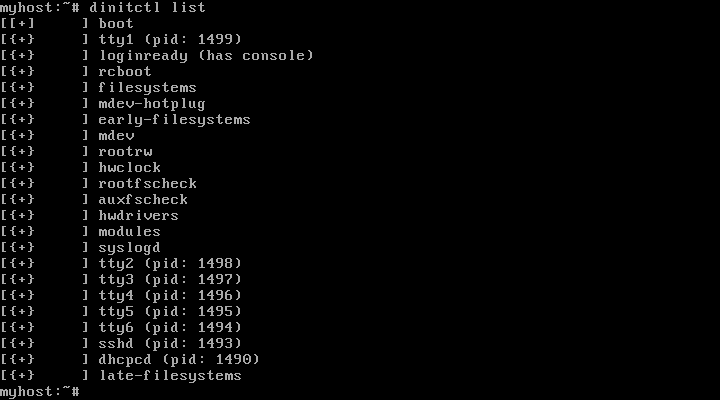

But, I released a new version just recently, and I’m finally happy to call Dinit “alpha stage” software. Meaning, in this case, that the core functionality is really complete, but various planned supporting functionality is still missing.

I myself have been running Dinit as the init and primary service manager on my home desktop system for many years now, so I’m reasonably confident that it’s solid. When I do find bugs now, they tend to be minor mistakes in service management functions rather than crashes or hangs. The test suite has become quite extensive and proven very useful in finding regressions early.

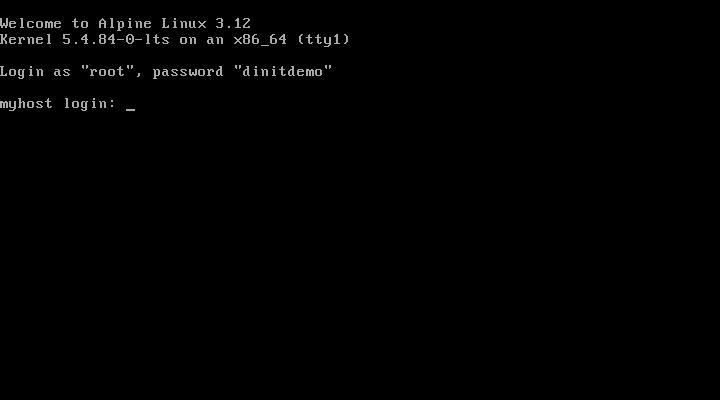

Alpine VM image

I decided to try creating a VM image that I could distribute to anyone who wanted to see Dinit in action; this would also serve as an experiment to see if I could create a system based on a distribution that was able to boot via Dinit. I wanted it to be small, and one candidate that immediately came to mind was Alpine Linux.

Alpine is a Musl libc based system which normally uses a combination of Busybox’s init and OpenRC service management (historically, Systemd couldn’t be built against Musl; I don’t know if that’s still the case. Dinit has no issues). Alpine’s very compact, so it fits the bill nicely for a base system to use with Dinit.

After a few tweaks to the example service definitions (included in the Dinit source tree), I was able to boot Alpine, including bringing up the network, sshd and terminal login sessions, using Dinit! The resulting image is here, if you’d like to try it yourself.

(The main thing I had to deal with was that Alpine uses mdev, rather than udev, for device tree management. This meant adapting the services that start udev, and figuring out how to get the kernel modules loaded which were necessary to drive the available hardware – particularly, the ethernet driver! Fortunately I was able to inspect and borrow from the existing Alpine boot scripts).

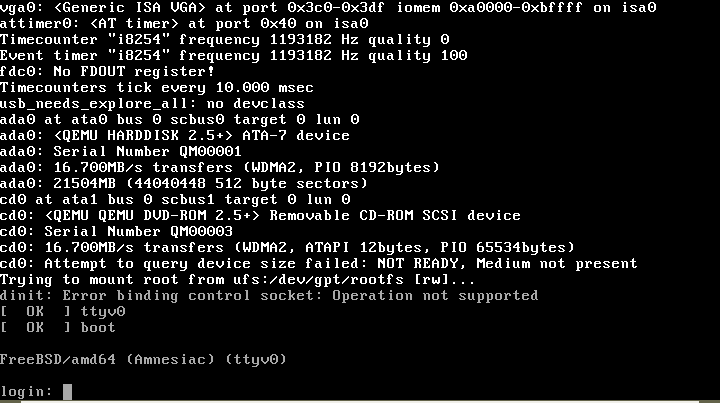

Booting FreeBSD

A longer-term goal has always been to be able to use Dinit on non-Linux systems, in particular some of the *BSD variants. Flushed with success after booting Alpine, I thought I’d also give BSD a quick try (Dinit has successfully built and run on a number of BSDs for some time, but it hasn’t been usable as the primary init on such systems).

Initially I experimented with OpenBSD, but I quickly gave up (there is no way that I could determine to boot an alternative init using OpenBSD, which meant that I had to continuously revert to a backup image in order to be able to boot again, every time I got a failure; also, I suspect that the init executable on OpenBSD needs to be statically linked). Moving on to FreeBSD, I found it a little easier – I could choose an init at boot time, so it was easy to switch back-and-forth between dinit and the original init.

However, dinit was crashing very quickly, and it took a bit of debugging to discover why. On Linux, init is started with three file descriptors already open and connected to the console – these are stdin (0), stdout (1) and stderr (2). Then, pretty much the first thing that happens when dinit starts is that it opens an epoll set, which becomes the next file descriptor (3); this actually happens during construction of the global “eventloop” variable. Later, to make sure they are definitely connected to the console, dinit closes file descriptors 0, 1, and 2, and re-opens them by opening the /dev/console device.

Now, on FreeBSD, it turns out that init starts without any file descriptors open at all! The event loop uses kqueue on FreeBSD rather than the Linux-only epoll, but the principle is pretty much the same, and because it is created early it gets assigned the first available file descriptor which in this case happens to be 0 (stdin). Later, Dinit unwittingly closes this so it can re-open it from /dev/console. A bit later still, when it tries to use the kqueue for event polling, disaster strikes!

This could be resolved by initialising the event loop later on, after the stdin/out/err file descriptors were open and connected. Having done that, I was also able to get FreeBSD to the point where it allowed login on a tty! (There are some minor glitches, and in this case I didn’t bother trying to get network and other services running; that can probably wait for a rainy day – but in principle it should be possible!).
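The underlying rule is that POSIX hands out the lowest-numbered unused file descriptor, so anything opened while 0, 1 or 2 is free will land in one of those slots. The sketch below shows one common way to guard against that before creating something like an event loop. It is not Dinit’s actual code: ensure_std_fds_open is a hypothetical name, and it uses /dev/null as a placeholder where a real init would presumably want /dev/console.

```cpp
#include <fcntl.h>
#include <unistd.h>

// Makes sure descriptors 0, 1 and 2 are all occupied, so that anything opened
// afterwards (an epoll or kqueue descriptor, say) gets a number above 2.
// Returns false if the placeholder device could not be opened.
bool ensure_std_fds_open() {
    for (;;) {
        // open() returns the lowest-numbered descriptor not currently in use.
        const int fd = open("/dev/null", O_RDWR);
        if (fd < 0) return false;
        if (fd > 2) {
            // 0, 1 and 2 were already taken; release the probe descriptor.
            close(fd);
            return true;
        }
        // fd is 0, 1 or 2: leave it open as a placeholder and probe again.
    }
}
```

With this called first, a later “close 0, 1 and 2 and re-open them from the console” step can no longer pull the rug out from under a descriptor that was opened earlier.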

Wrap-up

So, Dinit has reached alpha release, and is able to boot Alpine Linux and FreeBSD. This really feels like progress! There’s still some way to go before a 1.0 release, but we’re definitely getting closer. If you’re interested in Dinit, you might want to try out the Alpine-Dinit image, which you can run with QEMU.

Is C++ type-safe? (There’s two right answers)

I recently allowed myself to be embroiled in an online discussion regarding Rust and C++. It started with a comment (from someone else) complaining how Rust advocates have a tendency to hijack C++ discussions and suggesting that C++ was type-safe, which was responded to by a Rust advocate first saying that C++ wasn’t type-safe (because casts, and unchecked bounds accesses, and unchecked lifetime), and then going on to make an extreme claim about C++’s type system which I won’t repeat here because I don’t want to re-hash that particular argument. Anyway, I weighed in trying to make the point that it was a ridiculous claim, but also made the (usual) mistake of also picking at other parts of the comment, in this case regarding the type-safety assertion, which is thorny because I don’t know if many people really understand properly what “type-safety” is (I think I somewhat messed it up myself in that particular conversation).

So what exactly is “type-safety”? Part of the problem is that it is an overloaded term. The Rust advocate picked some parts of the definition from the Wikipedia article and tried to use these to show that C++ is “not type-safe”, but they skipped the fundamental introductory paragraph, which I’ll reproduce here:

In computer science, type safety is the extent to which a programming language discourages or prevents type errors

https://en.wikipedia.org/wiki/Type_safety

I want to come back to that, but for now, also note that it offers this, on what constitutes a type error:

A type error is erroneous or undesirable program behaviour caused by a discrepancy between differing data types for the program’s constants, variables, and methods (functions), e.g., treating an integer (int) as a floating-point number (float).

… which is not hugely helpful because it doesn’t really say what it means to “treat” a value of one type as another type. It could mean that we supply a value (via an expression) that has a type not matching that required by an operation which is applied to it, though in that case it’s not a great example, since treating an integer as a floating point is, in many languages, perfectly possible and unlikely to result in undesirable program behaviour; it could perhaps also be referring to type-punning, the process of re-interpreting a bit pattern which represents a value of one type as representing a value of another type. Again, I want to come back to this, but there’s one more thing that ought to be explored, and that’s the sentence at the end of the paragraph:

The formal type-theoretic definition of type safety is considerably stronger than what is understood by most programmers.

I found quite a good discussion of type-theoretic type safety in this post by Thiago Silva. They discuss two definitions, but the first (from Luca Cardelli) at least boils down to “if undefined behaviour is invoked, a program is not type-safe”. Now, we could extend that to a language, in terms of whether the language allows a non-type-safe program to be executed, and that would make C++ non-type-safe. However, also note that this form of type-safety is binary: a language either is or is not type-safe. Also note that the definition here allows a type-safe program to raise type errors, in contrast to the introductory statement from Wikipedia, and Silva implies that a type error occurs when an operation is attempted on a type to which it doesn’t apply, that is, it is not about type-punning:

In the “untyped languages” group, he notes we can see them equivalently as “unityped” and, since the “universal type” type checks on all operations, these languages are also well-typed. In other words, in theory, there are no forbidden errors (i.e. type errors) on programs written in these languages

Thiago Silva

I.e. with dynamic typing “everything is the same type”, and any operation can be applied to any value (though doing so might provoke an error, depending on what the value represents), so there’s no possibility of type error, because a type error occurs when you apply an operation to a type for which it is not allowed.
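To picture the “unityped” view in C++ terms, here is a small sketch (assuming C++17 for std::variant). The Value type and the negate function are invented for the illustration: every value has the same static type, the operation is accepted for all of them, and whether it makes sense is only found out at run time.

```cpp
#include <stdexcept>
#include <string>
#include <variant>

// A hypothetical "universal" value type: every value has this one static type.
using Value = std::variant<long, std::string>;

// Negation type-checks for any Value. Whether it is meaningful is decided
// at run time by looking at what the value actually holds.
Value negate(const Value &v)
{
    if (const long *n = std::get_if<long>(&v)) {
        return Value(-*n);
    }
    // A well-defined, raised error: no undefined behaviour is involved.
    throw std::runtime_error("negate: operand is not a number");
}
```

Calling negate on a Value holding a string compiles without complaint and throws when executed, which is the “might provoke an error” case: the failure is raised in a defined way rather than leaving the program in an arbitrary state.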

The second definition discussed by Silva (i.e. that of Benjamin C. Pierce) is a bit different, but can probably be fundamentally equated with the first (consider “stuck” as meaning “has undefined behaviour” when you read Silva’s post).

This notion of type error as an operation illegal on certain argument type(s) is also supported by a quote from the original wiki page:

A language is type-safe if the only operations that can be performed on data in the language are those sanctioned by the type of the data.

Vijay Saraswat

So where are we? In formal type-theoretic language, we would say that:

- type safety is (confusingly!) concerned with whether a program has errors which result in arbitrary (undefined) behaviour, and not so much about type errors

- in fact, type errors may be raised during execution of a type-safe program.

- C++ is not type-safe, because it has undefined behaviour
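The difference between an error that is raised and behaviour that is undefined can be seen with std::vector. This is only a sketch, and the two helper function names are made up for the example:

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Checked access: an out-of-range index is reported by throwing
// std::out_of_range, so the program's behaviour remains defined.
bool checked_read(const std::vector<int> &v, std::size_t i, int &out)
{
    try {
        out = v.at(i);
        return true;
    } catch (const std::out_of_range &) {
        return false;
    }
}

// Unchecked access: if i >= v.size() the behaviour is undefined.
// Nothing is raised; the language no longer says what the program does.
int unchecked_read(const std::vector<int> &v, std::size_t i)
{
    return v[i];
}
```

A language in which only the first kind of access existed could still be type-safe in the formal sense; it is the existence of the second kind that puts C++ on the other side of the line.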

Further, we have a generally-accepted notion of type error:

- a type error is when an attempt is made to apply an operation to a type of argument to which it does not apply

(which, ok, makes the initial example of a type error on the Wikipedia page fantastically bad, but is not inconsistent with the page generally).
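For what it’s worth, the two possible readings of “treating an integer as a floating-point number” look quite different in code. The sketch below assumes a 32-bit IEEE 754 float (checked with a static_assert), and the function names are mine:

```cpp
#include <cstdint>
#include <cstring>
#include <limits>

static_assert(std::numeric_limits<float>::is_iec559
                  && sizeof(float) == sizeof(std::uint32_t),
              "this sketch assumes a 32-bit IEEE 754 float");

// Conversion: the integer's value is carried over (1 becomes 1.0f).
float convert(std::uint32_t i)
{
    return static_cast<float>(i);
}

// Type-punning: the integer's bit pattern is re-read as a float.
// Copying the bytes with std::memcpy is a well-defined way to do this.
float pun(std::uint32_t bits)
{
    float f;
    std::memcpy(&f, &bits, sizeof f);
    return f;
}
```

With these, convert(1) yields 1.0f, whereas it takes pun(0x3f800000) to get 1.0f from a bit pattern. Neither is an operation applied to a type for which it isn’t allowed, which is part of why the example is a poor one.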

Now, let me quote the introductory sentence again, with my own emphasis this time:

In computer science, type safety is the extent to which a programming language discourages or prevents type errors

This seems to be more of a “layman’s definition” of type safety, and together with the notion of type error as outlined above, certainly explains why the top-voted Stack Overflow answer for “what is type-safe?” says:

Type safety means that the compiler will validate types while compiling, and throw an error if you try to assign the wrong type to a variable

That is, static type-checking certainly is designed to prevent operations that are illegal according to argument type from being executed, and thus provides a degree of type-safety.
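In C++ this kind of check can even be observed from within the program, via type traits. A minimal sketch follows; count_chars is a made-up function for the illustration:

```cpp
#include <string>
#include <type_traits>

// Assigning a std::string to an int is rejected by the compiler...
static_assert(!std::is_assignable<int &, std::string>::value,
              "std::string is not assignable to int");

// ...whereas assigning a double to an int is accepted, via an implicit
// conversion (which is where "strongly typed" becomes a matter of degree).
static_assert(std::is_assignable<int &, double>::value,
              "double is assignable to int");

int count_chars(const std::string &s)
{
    int n = 0;
    // n = s;                        // would not compile: no conversion exists
    n = static_cast<int>(s.size());  // fine: the types have been made to agree
    return n;
}
```

The commented-out line is the kind of thing the Stack Overflow answer has in mind: the mistake is caught before the program ever runs.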

So, we have a formal definition of type-safety, which in fact has very little to do with types within a program and more to do with (the possibility of) undefined behaviour; and we have a layman’s definition, which says that type-safety is about avoiding type errors.

The formal definition explains why you can easily find references asserting that C++ is not type-safe (but that Java, for example, is). The informal definition, on the other hand, clearly allows us to say that C++ has reasonably good type-safety.

Clearly, it’s a bit of a mess.

How to resolve this? I guess I’d argue that “memory-safe” is a better understood term than the formal “type-safe”, and since in many cases lack of the latter results from lack of the former we should just use it as the better of the two (or otherwise make specific reference to “undefined behaviour”, which is probably also better understood and less ambiguous). For the layman’s variant we might use terms like “strongly typed” and “statically type-checked”, rather than “type-safe”, depending on where exactly we think the type-safety comes from.